Artificial intelligence (AI) and machine learning (ML) have rapidly grown in presence across many aspects of society over the past several years, and HR is no exception. Hundreds of vendors have brought AI-enabled HR products to market, and many organizations appear eager to incorporate the latest technologies into their HR operations and reap the potential benefits. But as with broader societal applications of ML and AI, it is imperative that HR leaders be fully informed of both the opportunities and the risks, and that they be intentional and holistic in planning for the outcomes these technologies can create.

AI and Messy Reality

“Despite all the deep-learning hype, AI struggles with the messiness of reality.”¹ This statement from Clive Thompson, published in WIRED in February 2020, captures how the results of AI and ML to date compare with the expectations placed on them. Impressive advances abound in areas such as robotics, chatbots and recommendation engines, showcasing the speed and predictive accuracy with which this technology can operate. But these tend to be well-defined domains, each with a bounded (albeit large) range of possible variants. And in many of these cases, the cost of misunderstanding, misinterpretation or misclassification is relatively low. For example, a consumer might find it annoying to receive product recommendations or targeted ads that are off the mark, but that is a low-stakes outcome, more than offset by the increased sales that successful targeting brings. Iterating on these models to improve predictive accuracy is also relatively low stakes, providing ample opportunity to get it right with negligible collateral damage.

Contrast this with scenarios involving high-stakes decisions, where the consequences of getting it wrong are severe. Likelihood of reoffending, creditworthiness, purported presence at a crime scene based on facial recognition: these are all examples of predictions with potentially life-altering consequences for the individuals subject to them. These are the types of predictions that rest on messy reality, the very area where AI can struggle.

Technological Innovation and Accountability for Outcomes

Rushing to apply these technologies to messy human domains can, in fact, have dire consequences, especially when coupled with a lack of accountability for those consequences. Consider social media platforms. If we reflect on what some of these algorithmic technologies purportedly set out to do, the vision can be quite compelling: connecting people around the globe who otherwise wouldn’t be connected; providing life-saving emotional support to people experiencing hardship who otherwise wouldn’t have found each other; giving voice to the voiceless and power to the powerless; and changing the world for the better.

But in unleashing algorithm-driven technologies on societies, that vision bumped up against messy reality. Some profoundly positive outcomes have resulted, but many gut-wrenchingly awful ones have emerged alongside them: cyberbullying; microtargeted disinformation campaigns; bigotry, misogyny and a plethora of hate-driven and life-threatening behavior at scale; the erosion of core societal foundations; and threats to the very survival of democracy as we know it. And what has the response been from the companies responsible for these outcomes? For the most part, a collective shrug: we’re just a platform; free speech trumps all; let the audience interpret the content and decide on its veracity.

Given the outcomes to date, that is not good enough. More accountability is needed. Business and HR leaders can lead the way by embracing accountability when bringing this technology to the world of work, remaining hypervigilant about the full spectrum of outcomes being created and taking action accordingly.

The Value of (Accountable) AI for HR

It’s important to consider the benefits AI can bring to HR, and the potential is substantial. From better employee and candidate experiences to reduced time-to-hire, higher quality of hire and improved efficiency and effectiveness, AI and ML can bring measurable value across the talent lifecycle. A comprehensive account of one company’s experience, IBM’s, has been published previously²; a few highlights follow.

Creating a Better Candidate Experience

One example is creating a better experience for job seekers. IBM did this by implementing an AI-enabled conversational experience on its career site that engages job seekers individually and recommends jobs based on their skills and experience. The result is a better experience for people exploring IBM as a place to work, with high levels of interaction with the system and higher rates of click-through to job applications. Specifically, 86% of career site visitors opted to interact with the system, asking questions or pursuing job matching; 96% continued interacting with the system after job matches were surfaced; and 35% clicked through to apply to the recommended jobs, compared with an industry average of 9% applying from traditional career site navigation.

Helping Recruiters with Real-Time Insights

Another example is helping recruiters do their jobs better by embedding real-time insights in the recruiting workflow. A matching algorithm compares skills extracted from a job requisition with those extracted from candidates’ resumes or CVs and returns a match score that lets recruiters quickly assess candidate fit. The technology has a built-in capability to detect evidence of adverse impact (that is, systematic differences in selection rates based on the scores across demographic groups), so that indicators of bias can be flagged and mitigated. The system also prioritizes open job requisitions, helping recruiters make the best use of their time filling open positions.
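The adverse-impact check described above can be illustrated with a minimal Python sketch. IBM’s actual matching model and bias-detection method are not described here, so this is only an assumption-laden stand-in. The match score is a hypothetical Jaccard overlap of skill sets, and the 0.5 selection cutoff, function names, group labels and data are all invented for illustration. The flagging criterion is the four-fifths (80%) rule from the US Uniform Guidelines on Employee Selection Procedures.

```python
from __future__ import annotations

from collections import defaultdict


def match_score(req_skills: set[str], candidate_skills: set[str]) -> float:
    """Hypothetical match score: Jaccard overlap of requisition and candidate skill sets."""
    union = req_skills | candidate_skills
    if not union:
        return 0.0
    return len(req_skills & candidate_skills) / len(union)


def selection_rates(
    candidates: list[tuple[str, set[str]]],
    req_skills: set[str],
    threshold: float = 0.5,
) -> dict[str, float]:
    """Share of candidates in each group whose match score meets the (hypothetical) cutoff."""
    selected: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, skills in candidates:
        total[group] += 1
        if match_score(req_skills, skills) >= threshold:
            selected[group] += 1
    return {group: selected[group] / total[group] for group in total}


def adverse_impact_flags(rates: dict[str, float], cutoff: float = 0.8) -> dict[str, bool]:
    """Four-fifths rule: flag any group whose rate is below 80% of the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        return {group: False for group in rates}
    return {group: rate / highest < cutoff for group, rate in rates.items()}


# Illustrative data only: two candidates per hypothetical group.
requisition = {"python", "sql", "statistics", "communication"}
pool = [
    ("group_a", {"python", "sql", "statistics"}),
    ("group_a", {"python", "sql", "communication"}),
    ("group_b", {"python", "excel"}),
    ("group_b", {"python", "sql", "statistics", "communication"}),
]

rates = selection_rates(pool, requisition)
flags = adverse_impact_flags(rates)
print(rates)  # {'group_a': 1.0, 'group_b': 0.5}
print(flags)  # {'group_a': False, 'group_b': True}
```

The four-fifths ratio is a screening heuristic rather than a statistical test. With small groups like these, a significance test is commonly used alongside it before concluding that adverse impact is present.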

Results include a five-day reduction in cycle time compared with recruiters not using match scoring and, importantly, better candidate quality: 16% higher than for recruiters not using match scoring.

Embedded Intelligence and Quantified ROI

Additional AI implementations in IBM HR include intelligence that better informs managers’ compensation decisions; predictive analytics and targeted interventions to reduce unwanted attrition; personalized learning recommendations and career guidance to help employees develop their skills and grow their careers; and 24/7 chatbot support for everything from benefits selection to travel-related questions to new-hire onboarding.

All told, IBM calculated the financial benefits at over $100M per year, while creating better experiences across personas (employees, candidates, recruiters, managers and others), as evidenced by net promoter scores. Substantial benefits are clearly attainable, and importantly, IBM took steps along the way to deploy this technology responsibly. Doing so requires hard work, resolve and a fundamental belief in ethical AI and in getting it right.

Doing the Hard Work to Get it Right

Bringing AI to any domain is hard work, and HR is no exception. Getting the right outcomes requires anyone bringing this technology to HR to understand the risks and how to mitigate them, to understand the full spectrum of outcomes they are creating, and to be fully accountable for those outcomes.

Part of doing the hard work involves understanding the pitfalls such as bias: how it creeps into algorithmic prediction and decision-making, how to identify it, and how to mitigate it. We need to acknowledge it, and we need to address it. We cannot simply shrug it off.

Data Science + Domain Expertise

The approach taken at IBM is to combine domain expertise with data science. In the case of HR and job-related decisions about people, that expertise can be found among industrial-organizational (I-O) psychologists and HR professionals. The field of I-O psychology has produced decades of research on how to measure people in the context of work, how to use those measures to inform decisions, and how to understand and address human biases in decision-making.

The unique challenge with AI and machine learning is the potential to systematically incorporate biases at remarkable speed and scale. There’s an urgent need to address this, and a useful approach is to bring data scientists and I-O psychologists together to incorporate the best thinking from both disciplines. Data scientists tend to focus on maximizing prediction. I-O psychologists, while also concerned with prediction, are highly focused on measurement: understanding what is being measured, how well it is being measured, and whether the measures work equally well for everyone. For example, if we’re interested in cognitive ability, we might measure it with an intelligence test. If two people from different groups have equal levels of cognitive ability, they should score similarly on the test; if they don’t, there is evidence of measurement bias. Another type of bias is relational bias, in which scores predict an outcome well for one group but not for another. These biases are problematic, and I-O psychologists know to look for them and correct for them. Methods for doing so include adjusting the relative weights of predictors, using score banding, and broadening the measures of success the models are predicting.
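Two of the ideas above can be sketched in Python, under stated assumptions. The first function compares predictive validity (the score-to-outcome correlation) across groups as a simplified screen for relational bias. A fuller analysis would typically use moderated regression to compare slopes and intercepts across groups. The second implements one common form of score banding: a fixed band anchored at the top score, where scores within 1.96 standard errors of the difference (roughly 95% confidence) are treated as equivalent. Sliding bands are a common variant. The function names, reliability value, group labels and data are hypothetical.

```python
from __future__ import annotations

import math
from statistics import mean, stdev


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def validity_by_group(records: list[tuple[str, float, float]]) -> dict[str, float]:
    """Score-outcome correlation computed separately for each group.

    A large gap between groups is one signal of relational (predictive) bias.
    """
    by_group: dict[str, tuple[list[float], list[float]]] = {}
    for group, score, outcome in records:
        scores, outcomes = by_group.setdefault(group, ([], []))
        scores.append(score)
        outcomes.append(outcome)
    return {g: pearson_r(s, o) for g, (s, o) in by_group.items()}


def top_score_band(scores: list[float], reliability: float, z: float = 1.96) -> list[float]:
    """Scores treated as statistically indistinguishable from the top score (fixed band).

    Assumes the pool's standard deviation stands in for the test's, and that
    `reliability` is the test's reliability coefficient.
    """
    sem = stdev(scores) * math.sqrt(1 - reliability)  # standard error of measurement
    sed = sem * math.sqrt(2)  # standard error of the difference between two scores
    cutoff = max(scores) - z * sed
    return [s for s in scores if s >= cutoff]


# Illustrative data only: (group, test score, later performance rating).
records = [
    ("group_a", 60, 2.0), ("group_a", 70, 3.0), ("group_a", 80, 4.0),
    ("group_b", 60, 3.0), ("group_b", 70, 2.0), ("group_b", 80, 4.0),
]
validity = validity_by_group(records)
print(validity)  # {'group_a': 1.0, 'group_b': 0.5}

band = top_score_band([92, 90, 88, 85, 80, 75], reliability=0.9)
print(band)  # [92, 90, 88]
```

In this toy data, the same test predicts performance perfectly for one group but only moderately for the other, which is the pattern relational bias describes. The band shows how a 92, a 90 and an 88 could be treated as equivalent once measurement error is taken into account.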

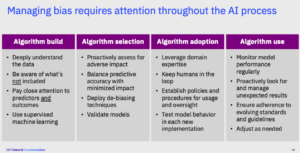

Combining this perspective and approach with data science expertise provides a roadmap for ethical and accountable AI for HR, with action taken at all stages of algorithm creation and deployment: algorithm build, selection, adoption and use (see Figure 1).

Figure 1. Roadmap for ethical AI in HR.

Concluding Thoughts

By combining sound data science with domain expertise, business and HR leaders have an opportunity to reap the benefits of bringing AI to HR while preventing collateral damage, such as perpetuating historical biases at scale. This requires recognizing the messy reality and complexity of people-related prediction, thinking holistically about the full spectrum of outcomes algorithms can create, and doing the hard work necessary to get it right. To borrow words of wisdom from DJ Patil, former US Chief Data Scientist, the prudent approach is to “move purposely and fix things.”

References

¹ Thompson, C. (2020, February 18). AI, the Transcription Economy, and the Future of Work. WIRED.

² Guenole, N., & Feinzig, S. (2018). The Business Case for AI in HR with Insights and Tips on Getting Started. https://www-01.ibm.com/common/ssi/cgi-bin/ssialias?htmlfid=81019981USEN