Point: Artificial Intelligence (AI) Must Be Part of Human Capital Management – by James Jeude

‘The purpose of Human Capital Management is to acquire, manage, and optimize the workforce that drives a modern business and powers the achievement of its mission and financial goals.’

Perhaps this sounds a little dull when reduced to a single sentence, yes? I’m pretty sure those of us who practice HCM are attracted to our chosen field for a reason: it is here, in managing the intersection of business, society, teams, and individuals, that we find a rich opportunity to be helpful and make an impact – to give a more diverse and complex answer than just saying ‘my job is to acquire, manage, and optimize people’.

Here in HCM we throw ourselves into a fascinating mix that brings rules, policies, and structure to a target audience that has the human traits of unpredictability. A target audience that, it seems, is all too ‘human’ at times. But this makes HCM one of the best and most interesting jobs in business.

And with the ‘human’ side of HCM comes a new wave of technical, business, and psychological innovations to better understand how we think, act, and perform as humans in the course of our jobs.

Perhaps the most exciting development in the past few years has been the rise of Artificial Intelligence (AI). When ‘Artificial Intelligence’ was coined as a phrase in 1956, computing was in its infancy. The technical reasons for AI’s sudden burst into relevance in the past few years are beyond the scope of this discussion[i], but now that it’s been unleashed, it’s not possible to match or exceed the power of your competition without including AI in the mix.

I see from my own experience that AI is a beneficial partner to HCM. Four points will converge as I make my case: (1) a definition of AI that aligns with the human resource channel, (2) quantitative measures that assist in building an unbiased view of the talent pool, (3) qualitative tools that augment and enhance the HCM function, and (4) cautions and reminders.

Evidence shows that AI is an effective companion in talent management when used wisely, and that heeding the cautions and reminders can make the difference between success and failure.

Let’s start with the definition. What exactly is AI, and how does it apply to HCM? My favorite definition is simple: AI learns by example. This definition[ii] is also powerful: think of the amazing feats of cognitive reasoning that are undertaken for an animal or a child to recognize patterns and people, to tell a cat from a dog by looking at many examples, and to identify completely new experiences based on past exposure. We don’t ‘program’ our brain by first learning the features of a cat in an if-then-else algorithm; we learn by example. AI does the same.

Why is ‘learn by example’ so important? Complexity and speed are the culprits. We are long past the stage where our biggest problems are precisely defined. Computers started in the 1940s calculating military trajectories, and moved to calculating balance sheets and income statements. These were classic ‘known problem’ situations. We still have a few similarly precise examples in HCM, such as following mandatory requirements for EEO reporting formats, but today’s most important tasks of HCM have no formal equation to guarantee success and don’t respond to traditional programming.

In quantitative terms, AI shines as well. It may seem counter-intuitive to use AI instead of classic statistics to help with basic measurement of workforce effectiveness, diversity, and attrition, but in real life the number of signals and the combinations of views of the data exceed our limited abilities as humans. AI can automate the tasks of ‘data science’ and widen its appeal, finding in seconds the patterns driving attrition that would take a data scientist weeks to uncover.

And finally, let’s look at AI’s role in the hottest areas of HCM, namely, the qualitative factors that enter into everything from recruitment screening to the hiring experience to management feedback, promotions, attrition, and beyond.

Let’s look at where AI-assisted HCM/TM has proven its value:

- In attrition management, AI helps with quantitative analysis. It can easily reach into a wide range of digitized factors and unstructured data and calculate more accurate predictions, with prescriptions. Traditional regression approaches struggle under the weight of today’s digital signals. IBM has reported that it can predict with 95% confidence whether someone is likely to leave in the next six months[iii]. Caution: some factors, such as commute time, aid model accuracy but can’t be controlled or used by the company in decision making. For example, you can’t choose a short-commute candidate over a long-commute candidate for a promotion because AI told you that the long-commute candidate is likely to leave sooner.

- In the recruitment cycle, AI is already well known to aid in the qualitative side of extracting key features from CVs and resumes, and does a terrific job normalizing words and identifying variations and word stems to profile the answers. Mega-companies like Marriott had 2.8 million applications in 2018, and numbers like this simply can’t be managed without AI assistance[iv].

- As the recruiting cycle progresses, AI interviewing is now playing a role for some companies in both the Q&A prescreening and even actual visual (face to face or video conference) interviewing[v]. This trend contains both promise and risk. In theory, AI’s consistency means that a candidate from mid-day and a candidate from end of day get the same treatment; with human interviewers, that’s often not the case because of human energy cycles. But when AI is trained to recognize ‘body language’ it must, by definition, be carrying biased definitions of normal behavior from the training set. Someone with entirely different gestures or movements could be incorrectly flagged as showing the wrong emotions for the needed situation and never pass to the stage of human interaction with your company[vi].

- The review and career planning cycle has long been one of HCM’s most difficult challenges. Differences in personality of manager and employee alike, in situations, in granularity, and in documentation quality make like-to-like comparisons between employees difficult. AI can help in this regard, and I consider it to be indispensable, provided it’s used in two ways: First, AI can ensure completeness and prepare a fact sheet for the manager by making sure harmonized and relevant information on job history, experiences, citations, and the like is gathered in one place. (AI is needed here because of complexity and volume.) Then, AI can look for key action phrases and recommend courses of action and courses of study to map the employee’s aspirations against the path to arrive there. The caution here, as always, is to allow AI to suggest but not demand a particular action – the AI may not have been trained on a particularly complex situation for a given employee involving medical, personal, or even local condition issues (weather, war, epidemic)[vii].

Here are some cautions to my enthusiasm for AI.

- AI ‘learns by example’, and if the examples are biased, it may continue that pattern in an automated manner and those biases become persistently embedded in the logic. Example: feed AI a set of attributes of previous Senior VPs and C-suite members, and it may conclude that being a Boy Scout or playing football is a predictor of success, even if you’ve explicitly removed gender as an attribute. Common sense and hard objective sensitivity testing are needed to check model results.

- The elements that AI can use to more accurately predict an outcome in aggregate may not be usable in determining actions for an individual. Example: AI attrition calculations, such as the IBM example mentioned above, use commute time as a factor. But a manager should not and cannot favor one candidate for promotion over another because of commute time, even if the computer predicts a more likely attrition for the long commuter. It’s critical and may be legally necessary to distinguish when AI is predicting in the aggregate and when decisions can be made at the individual level as a result.

- AI should focus on what machines and processes do well, not what humans do well. I advise clients to look for tasks that humans do repetitively for AI to replace, not for tasks that humans do creatively. Have AI create the briefing book before annual reviews, process the text in CVs (everyone does this anyway, but do it against a trained lexicon), predict broader trends but not individual actions, and gather statistics for management and regulatory agencies. Do NOT let AI make hiring decisions, create unquestioned scripts for reviews, provide ratings or rankings without human review, or be trained on historical data without a thorough understanding of the historical biases that may be perpetuated.
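The ‘hard objective sensitivity testing’ called for in the first caution can take many forms. One minimal sketch, assuming you can export each candidate’s group label alongside the model’s recommendation, is to compare selection rates across groups and send the model for human review when the gap is wide. The group labels, sample data, and 0.8 review threshold below are all hypothetical and chosen only for illustration; they are not a legal standard or any vendor’s method.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of candidates the model recommended, per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was recommended, so no group was favored
    return min(rates.values()) / highest


# Hypothetical model output: (group label, did the model recommend the candidate?)
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

rates = selection_rates(sample)
ratio = impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cutoff for illustration only
needs_human_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_human_review)
```

A check like this only flags a pattern in the aggregate. Deciding what caused it, and what to do about it, remains a human job.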

HCM is about humans, and organizing us to action for the greater good of our employers, their markets, and society. The complexity and speed of signals from the market and the workforce will overwhelm already-stressed HCM departments that reject AI. AI must be part of every successful company’s HCM practices. But that part must be in assistance, advice, and grunt work. If you hand over all of HCM to AI, you will fail – perhaps spectacularly and publicly. If you embrace AI, you can thrive.

Resources

[i] A good starting point is this thoughtful comparison of AI versus other technologies, and why it’s become prominent today. https://www.cognizant.com/whitepapers/the-ai-path-past-present-and-future-codex5034.pdf

[ii] Malcolm Frank, Paul Roehrig, and Ben Pring, What to Do When Machines Do Everything, p. 48, articulates this definition in detail.

[iii] https://business.linkedin.com/talent-solutions/blog/artificial-intelligence/2019/IBM-predicts-95-percent-of-turnover-using-AI-and-data

[iv] https://nypost.com/2017/12/04/ai-already-reads-your-resume-now-its-going-to-interview-you-too/

[v] Ibid.

[vi] https://www.businessinsider.com/body-language-around-the-world-2015-3

[vii] https://www.weforum.org/agenda/2019/07/pay-raises-could-be-decided-by-ai/

[viii] https://www.theguardian.com/technology/2018/oct/10/amazon-hiring-ai-gender-bias-recruiting-engine

Counterpoint: Artificial Intelligence for Human Capital Management: “Not Ready For Prime Time?” – by Bob Greene, Ascentis

One of my all-time favorite old movies, now 52 years old, is “2001: A Space Odyssey.” Screenwriter/director Stanley Kubrick and book author Arthur C. Clarke were way ahead of their time, despite incorrectly predicting the year by which artificial intelligence would be perfected to the point that it could threaten to replace humans. And the satire was nearly perfect; Kubrick and Clarke weren’t just warning us of the day in the future that AI would take over, but also that … well … it might not work right on its maiden rollouts! Many “2001” fans cite, as their favorite line, the announcement by HAL, the computer: “I’m sorry, Dave. I’m afraid I can’t do that”, signaling the revolt of AI against its owners and developers. However, I prefer when HAL informs Dave, “I know I’ve made some very poor decisions recently” – the perfect, ridiculous, ironic accusation by the script writers that AI won’t necessarily be ready for prime time on its first introductions.

As entertaining as that movie was and still is, it was intended as satire – hardly a serious prediction of the course of the initial debut of AI, right? I thought that, until I read a Reuters article[1] published on October 9, 2018, entitled, “Amazon scraps secret AI recruiting tool that showed bias against women”. In an opening line that rivals the words from “2001” for irony, the article begins, “Amazon.com Inc’s machine-learning specialists uncovered a big problem: their new recruiting engine did not like women.”

The absurdity of this article’s opening statement is as obvious as it is profound: artificial intelligence programs cannot “teach themselves” to dislike women. Can they? Two things should pop to mind in trying to make sense of this strange result for anyone with even passing familiarity with AI: (a.) AI programs teach themselves to produce better results based on the input they are given over time, and (b.) AI programs are written by human beings, who carry with them all the experiences, biases and possibly skewed learning that we’d hope AI programs would not absorb and reflect.

As just one small but very typical example of the kinds of flaws that the Amazon article cited, the recruiting program taught itself that when it encountered experiences like “women’s chess club champion” vs. “men’s chess club champion” in a resume being evaluated, the women’s designation was less valuable in terms of “points assigned” (or ranking) than the men’s designation, because more men with the designation were successfully chosen for hire than women. But that result was caused by an intervening event: a human recruiter, or manager, or CHRO, made the hiring decision. And the article points out that at the time it was written, the gender imbalance in technical roles at four of the top technology firms was so pronounced it could not have been coincidence – women held 23% of technical roles at Apple, 22% at Facebook, 21% at Google, and 19% at Microsoft. (At the time the article appeared online, Amazon did not publicly disclose the gender breakdown of its technology workers.) The AI program, for whatever reason, was guilty of perpetuating previous gender bias. According to the article, after multiple attempts to correct the problem, Amazon abandoned the AI recruiting effort.
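To see how a program can “teach itself” this kind of result, consider a deliberately tiny sketch. It is not Amazon’s system, whose internals the article does not describe; it is a hypothetical scorer that simply learns, for each word on a resume, how often past resumes containing that word led to a hire. The history below is invented so that identical achievements were rewarded differently by past human decisions.

```python
from collections import Counter


def token_hire_rates(history):
    """For each resume token, the share of past resumes containing it that were hired."""
    seen = Counter()
    hired = Counter()
    for tokens, was_hired in history:
        for token in set(tokens):
            seen[token] += 1
            if was_hired:
                hired[token] += 1
    return {token: hired[token] / seen[token] for token in seen}


def score_resume(tokens, rates):
    """Average the learned hire rates of the tokens the scorer has seen before."""
    known = [rates[t] for t in set(tokens) if t in rates]
    return sum(known) / len(known) if known else 0.0


# Invented history: the same achievement, but past human decisions favored one group.
history = (
    [(["men's", "chess", "champion"], True)] * 8
    + [(["men's", "chess", "champion"], False)] * 2
    + [(["women's", "chess", "champion"], True)] * 1
    + [(["women's", "chess", "champion"], False)] * 4
)

rates = token_hire_rates(history)
mens_score = score_resume(["men's", "chess", "champion"], rates)
womens_score = score_resume(["women's", "chess", "champion"], rates)
print(round(mens_score, 2), round(womens_score, 2))
```

Nothing in the code mentions gender as a factor. The lower score for the second resume comes entirely from the human decisions recorded in the history.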

Employees Are Different

At the risk of stating the obvious, the programming techniques used in AI development are different depending upon the objects being reported. Let’s take some examples: a head of lettuce (because food safety is a hot topic now), a virus (because, well, that is the HOTTEST of topics right now), and an employee or applicant – a human being. Let’s also look at some of the characteristics of each class of object and how those might advance, or limit, our ability to successfully deploy AI for them.

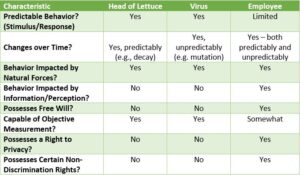

Fig. 1: AI Development Challenges: Humans Are Different

Whether the objective of a particular AI effort is predictive analytics, substituting machine-made decisions for human ones, or gathering new and valuable characteristics from a group of objects by analyzing big data (in ways and at a speed that efforts unaided by AI could never accomplish), the above truth table begins to shed light on what makes any HR-related AI design effort inherently more difficult than other efforts.

AI Utilization: The Good, The Bad…And The Non-Compliant

There are certainly examples of AI applications in HR that can navigate these “rapids” and still manage to deliver value. These tend to be the queries that are based on well-documented human behavior, have no privacy issues and can still offer helpful insights. Analyzing a few years’ attendance data to predict staffing levels the day before or after an upcoming three-day holiday weekend is a good example.
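As a rough sketch of that attendance example, assume each past workday has been labeled by its position relative to a holiday weekend and paired with that day’s absence rate. Even a simple average per label gives a usable staffing estimate. The labels, rates, and required headcount below are made up for illustration, and a real effort would use far more history and a more careful model.

```python
import math
from collections import defaultdict
from statistics import mean


def average_absence_by_day_type(history):
    """Average the observed absence rate for each kind of day."""
    grouped = defaultdict(list)
    for day_type, absence_rate in history:
        grouped[day_type].append(absence_rate)
    return {day_type: mean(values) for day_type, values in grouped.items()}


def staff_to_schedule(required_on_floor, expected_absence_rate):
    """Headcount to schedule so that expected attendance meets the requirement."""
    if not 0 <= expected_absence_rate < 1:
        raise ValueError("absence rate must be at least 0 and below 1")
    return math.ceil(required_on_floor / (1 - expected_absence_rate))


# Hypothetical history: (day type, fraction of scheduled staff absent that day)
history = [
    ("regular", 0.04), ("regular", 0.06),
    ("before_holiday", 0.10), ("before_holiday", 0.14),
    ("after_holiday", 0.08), ("after_holiday", 0.12),
]

expected = average_absence_by_day_type(history)
regular_headcount = staff_to_schedule(50, expected["regular"])
pre_holiday_headcount = staff_to_schedule(50, expected["before_holiday"])
print(regular_headcount, pre_holiday_headcount)
```

Because the estimate is made for the workforce as a whole and never singles out a person, it stays clear of the privacy and discrimination concerns that complicate other HR predictions.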

But things can get murky when more complex stimulus/response mechanisms are introduced. For example, we can predict quite accurately the rate of decay of a head of lettuce based on environmental factors like heat, humidity and sunlight. We can predict, with somewhat less accuracy, the response of a virus to the introduction of a chemical, like an antiviral agent, into its petri dish. But extend that example to employees: how accurately can we predict cognitive retention rates for a one-hour training session we offer? Such predictions would be vital for succession planning and career development, and maybe even for ensuring minimum staffing availability for a line position in a sensitive manufacturing sequence. How accurately can we predict the impact on a tight-knit department or team of introducing a new manager who is a “bad cultural fit” – if we can even objectively define what that means? How accurately can we predict the favorability of a massive change to our benefit program slated for later this year? Such predictions would be vital to avoid declines in engagement and, potentially, retention.

When we turn to compliance, even more roadblocks to AI present themselves. Heads of lettuce have no statutory rights to be free from discrimination, but employees do. Viruses have no regulatory-imposed right to privacy, but employees in Europe, California, and various other jurisdictions do. In supply chain management, we can feed into our new AI program a full history of every widget we’ve ever purchased for manufacturing our thing-a-ma-bobs, including cost, burn rate, availability, inbound shipping speed, quality, return rate, customer feedback, repair history and various other factors. As a result, our AI program can spit out its recommendation for the optimal order we should place – how many, where and at what price. If that recommendation turns out to be incorrect or to have overlooked some key or unforeseen factor, no widget can claim its “widget civil rights” were violated, or sue us for violation of the “Widget Non-Discrimination Act.”

But consider the same facts and details as they might be applied to recruiting. Given similar considerations, is it any wonder that a computing powerhouse like Amazon.com was unsuccessful in applying AI to resume screening? Further, is it any wonder that the European Union, in Article 22 of the General Data Protection Regulation, which took effect in May 2018, included a provision prohibiting totally automated decision making, including for job applicants, without human intervention[2]?

Since so many areas of modern HR management inherently involve judgement calls by experienced, hopefully well-trained managers and directors, how do we take that experiential expertise and transfer it to a computer program? We haven’t found a way to do that yet. The decision might be around hiring, staff reductions, promotions, or salary increases, or even simply the opportunity to participate in a valuable management training program. What Amazon’s experience has taught us is that if a decision confers benefit on those chosen and disadvantage, no matter how slight, on those passed over, then any uncontrolled or unmonitored use of AI in making it carries potential liability for the organization.

Not “No”, But Go Slow?

This is not intended to be the average Counterpoint column, because I am not advocating against the use of AI in all aspects of HR Management and its sub-disciplines. It is, however, essential that Human Capital Management professionals, in their decision-making around the design and implementation of Artificial Intelligence programs, consider the factors that make HR unique: human variability, a compliance framework fraught with limitations and prohibitions, and corporate environmental practices and priorities that are ever-changing.

Endnotes:

[1] Reuters Technology News: Amazon Scraps Secret AI Recruiting Tool That Showed Bias Against Women. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G

[2] UK Information Commissioner’s Office (ICO): Rights Related to Automated Decision Making Including Profiling. https://ico.org.uk/for-organisations/guide-to-data-protection/guide-to-the-general-data-protection-regulation-gdpr/individual-rights/rights-related-to-automated-decision-making-including-profiling/